One thing I didn't expect when I started using AI seriously was how quickly it would expose my own thinking. Not improve it—expose it.

Before AI, you could hide weak thinking for a long time. You could compensate with sheer effort, polish, or just staying at your desk until you were too tired to care. You could drown uncertainty in complexity and extra words. Because the work took so much time to produce, the logic behind it was hard for anyone, including you, to really inspect. AI changed that almost overnight.

Speed removes the camouflage

When something takes days or weeks to finish, it’s easy to confuse being busy with being clear. You feel productive because you’re exhausted. But AI removes that camouflage.

When you can generate ten different versions of an idea in minutes and none of them feel right, you can’t blame execution anymore. The problem isn’t your speed; it’s your direction. You didn’t lack the right tools; you lacked a point of view. It’s a deeply uncomfortable realization, but it’s an honest one.

Why AI feels so threatening

I think a lot of the backlash against AI isn’t actually about jobs or “real” creativity. It’s about exposure. AI collapses the distance between having a thought and seeing it on a screen. When that distance disappears, there’s nowhere left to hide fuzzy reasoning or borrowed opinions.

If you don't know what you actually want, AI just reflects that uncertainty back at you. It gives you a hundred reasonable options that lead nowhere. That doesn't feel like help—it feels like a judgment on your indecision.

Strong thinking wants friction

I’ve noticed that when someone with real judgment uses AI, the whole interaction changes. They aren’t asking, “What should I think about this?” They’re saying, “Here is what I think—now tell me where it breaks.”

They bring a direction, a bias, and a rough conclusion to the table. AI doesn't replace those things; it sharpens them. It finds the weak spots and missing context. The output improves only because the input had a spine to begin with.

Weak thinking wants the AI to provide certainty.

Strong thinking wants the AI to provide friction.

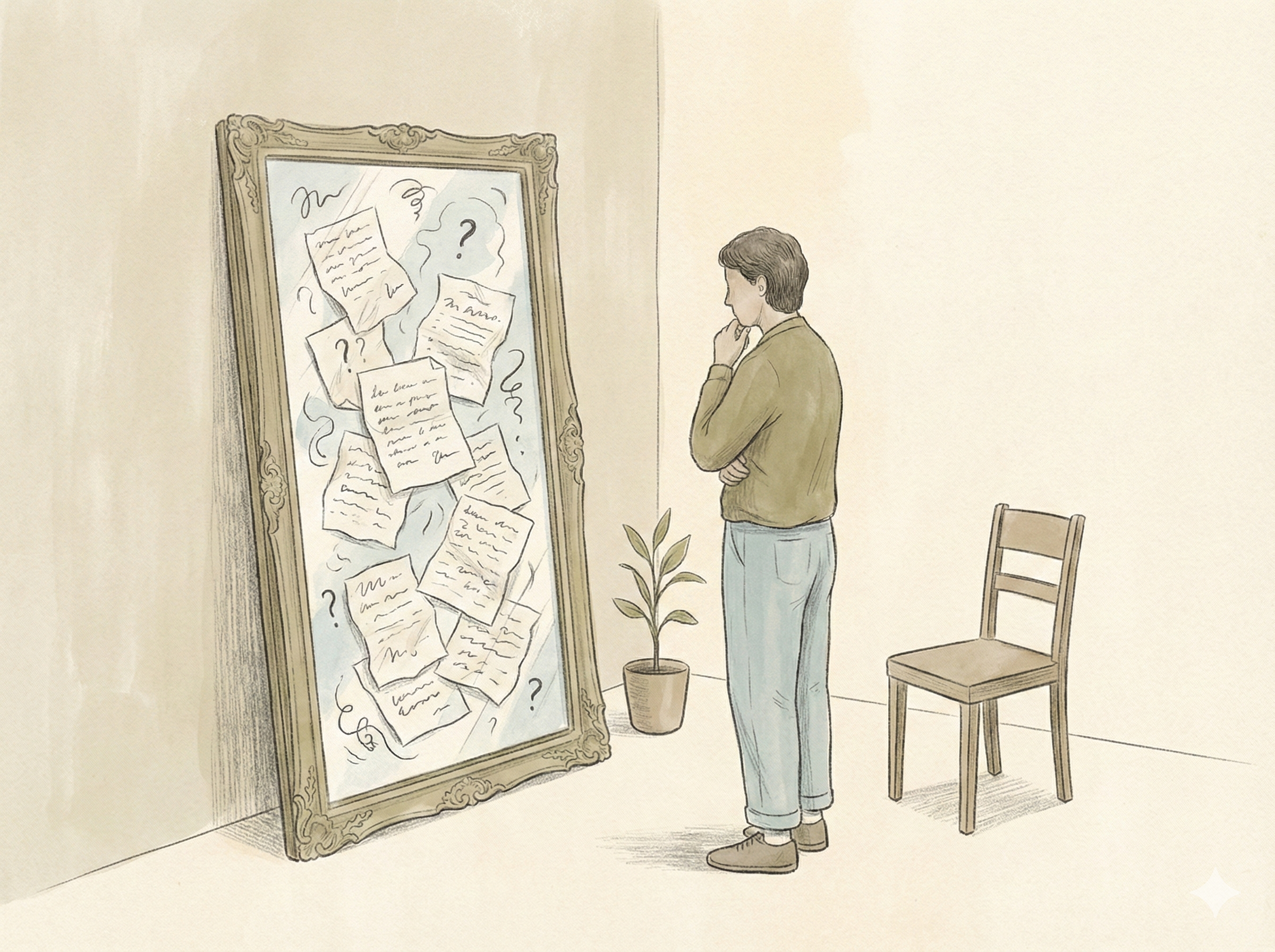

The mirror effect

I've realized that AI is a mirror, not a mind. If you bring vague goals, you get generic output. If you bring borrowed opinions, you get recycled language. But if you bring clarity, you get leverage.

That’s why two people can start from the exact same prompt and walk away with completely different results. The difference isn’t prompt engineering; it’s the quality of the thought that existed before the prompt was even typed. AI doesn’t create insight; it reveals whether you had any to begin with.

Learning to sit with the reflection

I still hit plenty of moments where AI feels totally useless. But when that happens now, I try to treat it as a signal instead of a failure of the tech. It usually means I haven’t decided what matters yet, or I’m trying to avoid a hard trade-off.

When I slow down and do that internal work first, the AI suddenly becomes helpful again. Not magical—just helpful.

Final thought

AI doesn’t reward intelligence as much as it rewards clarity. It exposes weak thinking early so you can actually build something strong later. The tool isn’t judging you, but it is removing the delay between confusion and feedback. Once that delay is gone, the only thing left to improve is the part that can’t be automated: how clearly you think before you ever ask for help. I’m still learning how to look in that mirror without flinching.